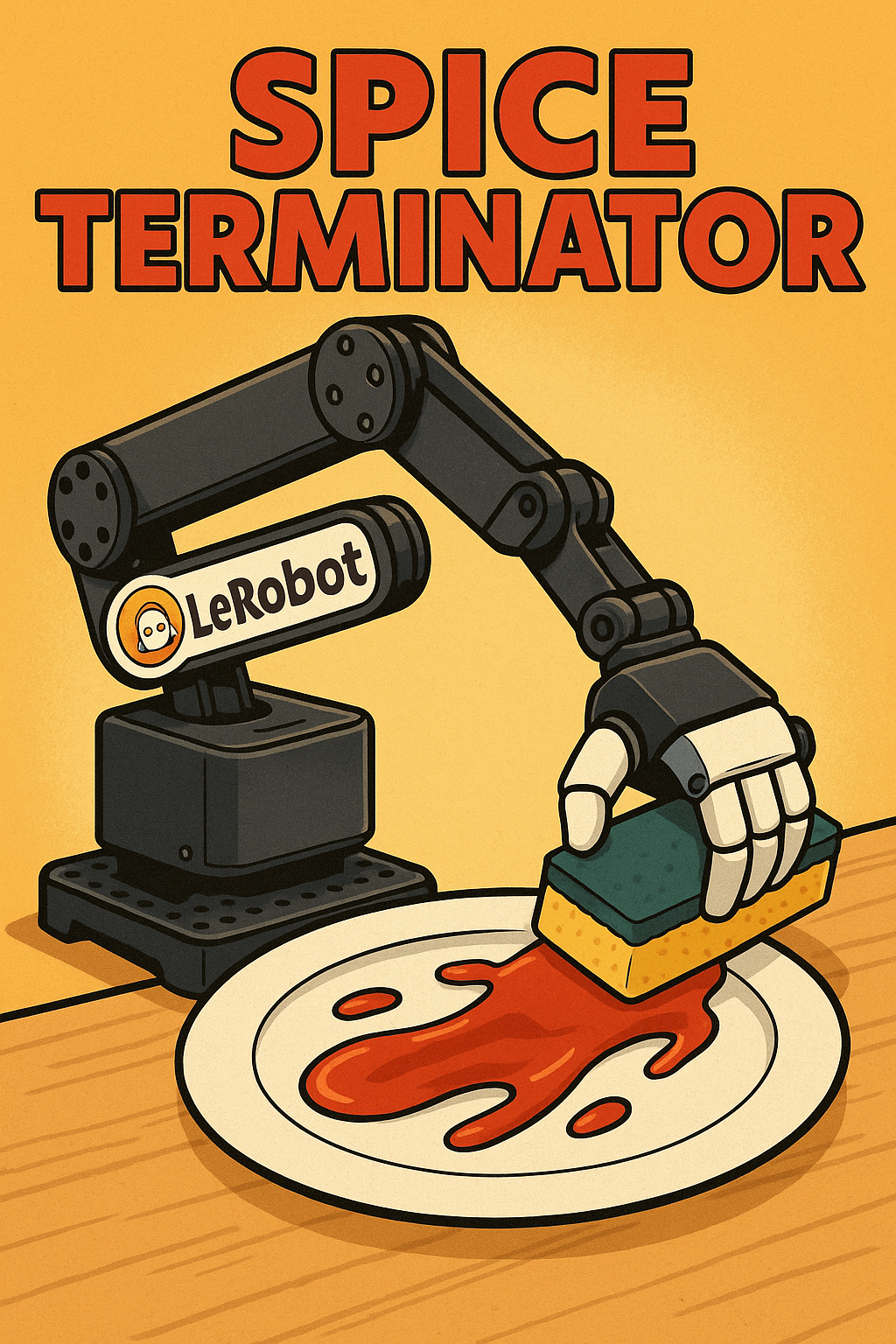

Spice Terminator: SO-101 Arm That Wipes Plates

Building a Robot Arm That Wipes Plates

Cleaning dishes is genuinely one of the most annoying yet unavoidable parts of the day. You come home, tired after work. Maybe you gather enough energy to cook yourself a decent meal. But the moment you’re done eating, the last thing you want to do is clean up.

So… what if you could teach a robot to do it for you?

What if you could sit in your room, watch it in action, and make sure it doesn’t break something?

What if you could deploy a bunch of them in kitchens or factories, train them remotely, and just monitor the results?

That’s what we built, and it ended up earning us a tie for Most Novel Solution at the Hugging Face LeRobot hackathon.

We call it Spice Terminator — a robot powered by imitation learning that wipes plates squeaky clean.

Setting

Hugging Face organized the LeRobot Worldwide Hackathon 2025, a global celebration of AI-powered robotics. It was open to all, from hardcore robotics folks to curious tinkerers. The London chapter, hosted by SoTA, was where we joined in. We took part in the Imitation Learning track, where the challenge was to teach robots how to complete real-world tasks through demonstrations. Our chosen mission: teaching a robot arm to clean dishes.

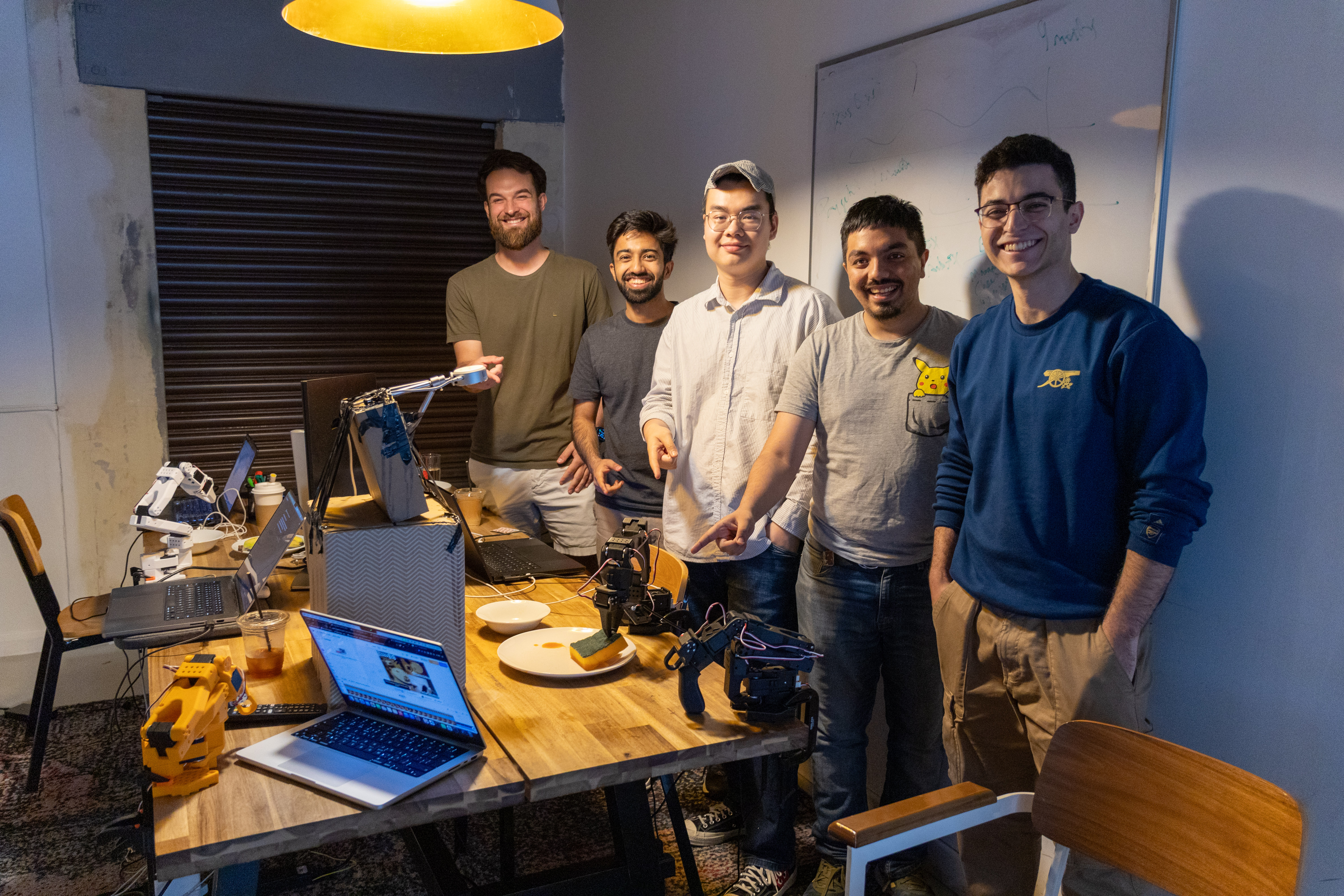

Meet the Team

Our team had a diverse background — different skill sets, but all aligned in motivation.

I work as an Applied Scientist at Flawless AI, building lip sync tools for film production.

William, a seasoned founder and product manager, brought structure and clarity to our project.

Sanan, a Robotics Master’s student at UCL, had hands-on experience with vision-language architectures.

Atharv and Bill, both Research Engineers, had already worked with the SO-101 embodiment and brought the hardware expertise to the table.

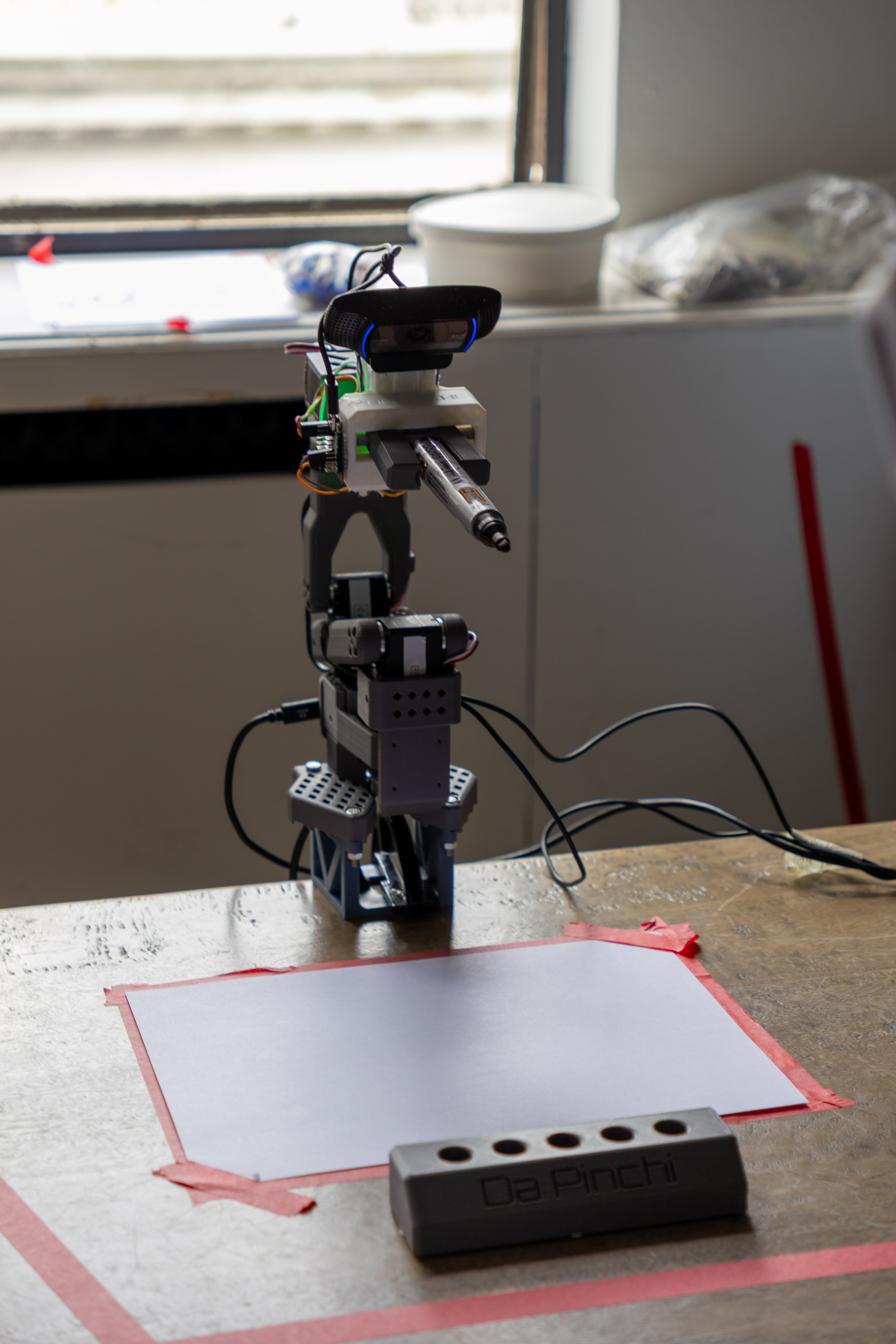

We had access to two pairs of SO-101 arms, one acting as the leader, the other as the follower. The follower had a camera mounted on its wrist, and we also had an Intel RealSense camera mounted overhead.

For William and me, this was the first time working with embodied agents in the physical world. And I won’t lie — I felt like a kid in a magic show. Watching the robot arms move and respond, I was just standing there with my jaw dropped. It felt unreal.

Toolbox

The SO-101 is the upgraded version of the SO-100 arm, developed by RobotStudio and Hugging Face. It’s designed to work with the open-source LeRobot library and costs around $130 (excluding 3D-printed parts). Affordable and open: a nice combo.

For intelligence, we leaned on vision-language-action (VLA) models. These models take in both camera images and language instructions, and can generalize actions across different robot embodiments. We fine-tuned NVIDIA GR00T N1.5 and SmolVLA on our demonstrations. Our setup included:

- A leader-follower SO-101 pair

- A wrist-mounted camera on the follower

- An overhead Intel RealSense camera
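For readers new to leader-follower setups, the idea can be sketched roughly as below. This is a simplified illustration, not the LeRobot API and not our actual code: `SimulatedArm`, `teleop_step`, and the joint names are hypothetical stand-ins. A human moves the leader arm, the follower copies its joint positions, and each step is logged as an (observation, action) pair that an imitation-learning policy can later train on.

```python
from dataclasses import dataclass, field

# Assumed joint names, for illustration only; real hardware naming may differ.
JOINTS = ["shoulder_pan", "shoulder_lift", "elbow_flex",
          "wrist_flex", "wrist_roll", "gripper"]


@dataclass
class SimulatedArm:
    """Hypothetical stand-in for a real arm; stores joint positions in degrees."""
    positions: dict = field(default_factory=lambda: {j: 0.0 for j in JOINTS})

    def read(self) -> dict:
        # Return a copy so logged samples are not mutated by later writes.
        return dict(self.positions)

    def write(self, target: dict) -> None:
        self.positions.update(target)


def teleop_step(leader, follower, camera_frames, episode):
    """Mirror the leader pose onto the follower and log one training sample."""
    observation = follower.read()  # follower state before it moves
    action = leader.read()         # the human-guided leader pose is the action label
    follower.write(action)
    episode.append({"observation": observation,
                    "images": camera_frames,
                    "action": action})
    return action


leader, follower = SimulatedArm(), SimulatedArm()
episode = []
leader.write({"gripper": 30.0})  # pretend the operator opened the gripper
teleop_step(leader, follower, {"wrist": None, "overhead": None}, episode)
```

In a real setup the `images` entry would hold frames from the wrist and overhead cameras, and the loop would run at a fixed rate for the length of each episode.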

The environment? A plate with red sauce on it, a bowl with a wet sponge, and some clutter we threw in to make things harder: spoons, cans, tissues, whatever we could find. The task sounded simple on paper: pick up the sponge, wipe the sauce off the plate, and put the sponge back in the bowl. But in practice? Way harder than it looks.

The challenges for the robot arm were:

- Picking up the sponge correctly from its slippery edge.

- Aligning the sponge with the plate, depending on how it had been picked up.

- Tracking progress, since the sauce could spread while wiping.

- Coping with occlusion: sometimes the arm blocked the overhead camera, leaving it with no view of the plate.

- Deciding whether the plate was clean “enough.” We had no precise metric for this; the policy had to learn it from the demonstrations.
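To make the “clean enough” problem concrete, here is one hypothetical way to put a number on it, for example when reviewing runs afterwards. This is only an illustrative sketch and not part of the system described here: the function names, the RGB thresholds, and the 2% tolerance are all made-up assumptions, and a crude color threshold like this would struggle with lighting changes and occlusion.

```python
def sauce_fraction(pixels, min_red=150, max_other=90):
    """Fraction of pixels that look like red sauce under a crude RGB threshold.

    `pixels` is a list of (r, g, b) tuples, e.g. cropped from the plate region.
    The threshold values are illustrative assumptions, not tuned numbers.
    """
    if not pixels:
        return 0.0
    red = sum(1 for r, g, b in pixels
              if r >= min_red and g <= max_other and b <= max_other)
    return red / len(pixels)


def is_clean_enough(pixels, tolerance=0.02):
    """Call the plate clean when at most `tolerance` of it still looks red."""
    return sauce_fraction(pixels) <= tolerance


# Toy data: a white plate with 10% red pixels, then with 1% red pixels.
white, red = (240, 240, 240), (200, 30, 30)
dirty_plate = [white] * 90 + [red] * 10
wiped_plate = [white] * 99 + [red] * 1

print(is_clean_enough(dirty_plate))  # False
print(is_clean_enough(wiped_plate))  # True
```

The appeal of imitation learning is that the policy picks up a stopping criterion from the demonstrations, so no such hand-written rule is needed.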

We even moved the plate mid-task, added more sauce, and tested how well it adapted. The robot had to generalize where the plate, sponge, and bowl were placed and ignore other objects.

Build Phase

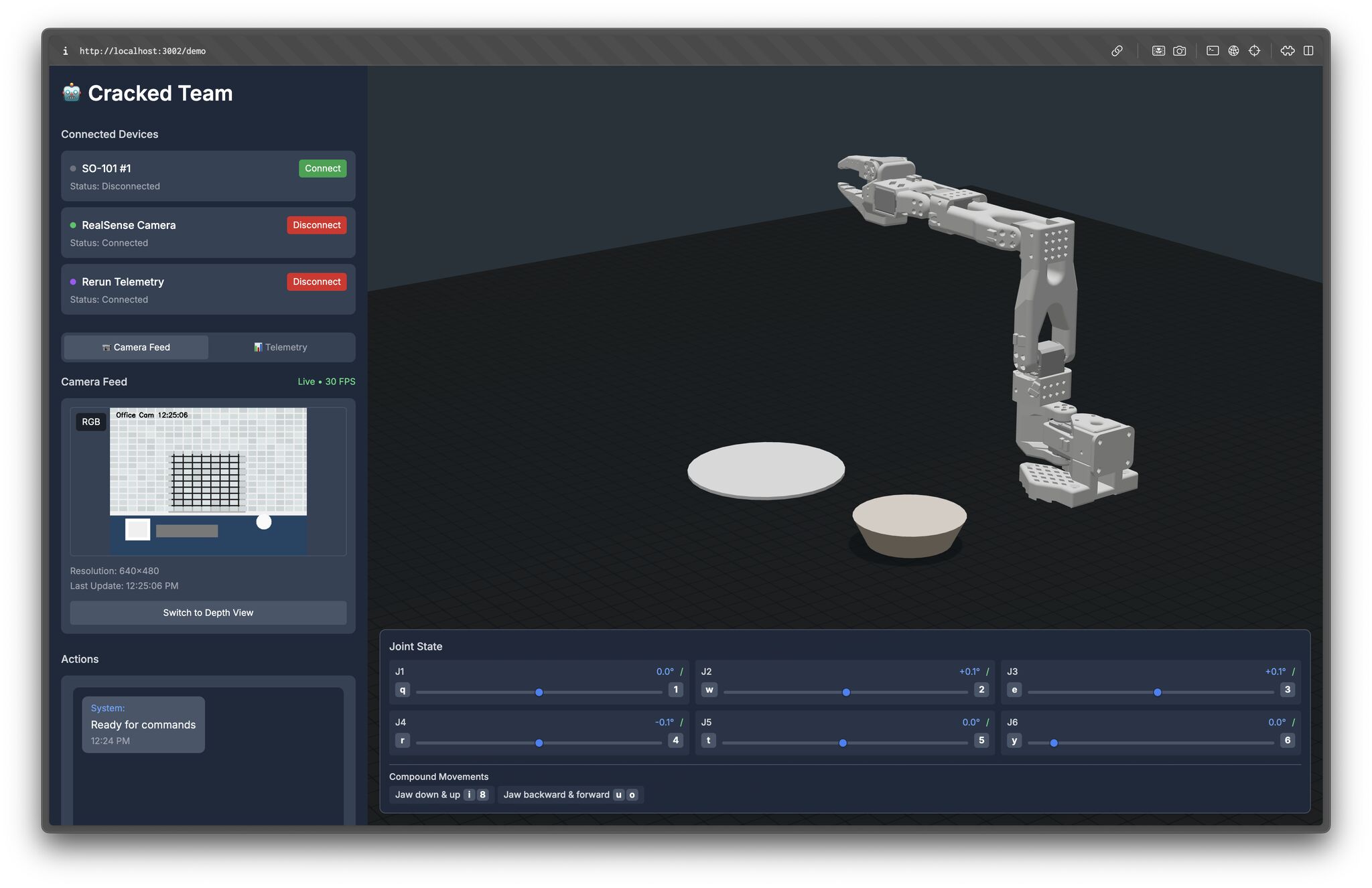

This was my favorite part. During planning, Will took the lead — outlining goals, defining OKRs, and keeping us grounded. He proposed an amazing devtool idea: a browser-based interface to visualize and control the robot. It turned out to be a huge asset.

On the day of the hackathon:

- Atharv and Bill handled hardware setup.

- Sanan calibrated the robots with laser-sharp focus (seriously, no false moves).

- Bill and I worked on designing the experiment space and prepping props.

- Sanan and Atharv began exploring the VLA models and got the initial pipelines and environments running.

- Will developed the web interface and tested it thoroughly.

Bill and I took charge of collecting the demonstration data. I started by teleoperating the leader arm, guiding it to pick up the sponge, wipe the plate, and return the sponge to the bowl. After the first 25 episodes, Bill took over, and honestly, he was a natural. He was super smooth with the arm, and by the end, we had collected 75 episodes across varying levels of difficulty. Then I worked on sorting the data and fine-tuning the GR00T N1.5 model while Sanan fine-tuned SmolVLA. Atharv then made sure the inference code worked and didn’t break the robot. He also handled anything that went wrong.

There were hiccups, of course: dependency hell, broken imports, and unexpected JSON naming. But eventually, the robot learned to clean the plate.

The first time it worked, we just froze. Then the cheers came. Seeing it actually execute a full clean felt like magic.

Demo Day Chaos

Now, as with any real robotics demo, things didn’t go perfectly. Once we hit “record,” the robot started acting up:

- Picked the bowl instead of the sponge.

- Wiped the wrong part of the plate.

- Got stuck mid-task.

- Didn’t want to leave the plate.

But after some careful adjustments, it worked. It picked the sponge, wiped the sauce, and returned the sponge. We clapped every single time it worked. It was like watching a close game on TV with fingers crossed.

We even tested it on a greasy paper lunchbox top — and it wiped that too!

Presentation

During the showcase, every team brought their A-game. They built projects like:

- A robot that pipetted liquid into test tubes and picked up the whole stack.

- A custom robot arm that drew pictures.

- Robots that played chess.

- An arm that picked up bread and put it into a toaster.

- An arm that picked garbage off a conveyor belt.

- A robot that scanned the environment and figured out the shortest route.

People designed and 3D-printed custom grippers, vision rigs, and environments. The level of execution across the board was genuinely inspiring. Every single project was jaw-dropping, and honestly it was a privilege to see them live.

Awards

We ended up tying for Most Novel Solution. Our problem was simpler than some, and everyone brought something unique, but the creativity and robustness of our approach came through. I think what helped us stand out was:

- Tight team roles

- Working, adaptive demo

- Clear, testable problem

I had learned from a previous hackathon how critical it is to optimize the demo, not just the codebase. That definitely paid off this time.

Final Thoughts

This was easily one of the best weekends I’ve had in a long time. The team vibe was spot-on: collaborative, efficient, and always fun. Huge shoutout to the SoTA organizers for creating such a welcoming, high-energy atmosphere. They were super helpful and gave us access to Ori GPUs and any hardware we wanted at a moment’s notice. The food was great, the people were amazing, and the projects were next level. I’m already looking forward to the next LeRobot hackathon.